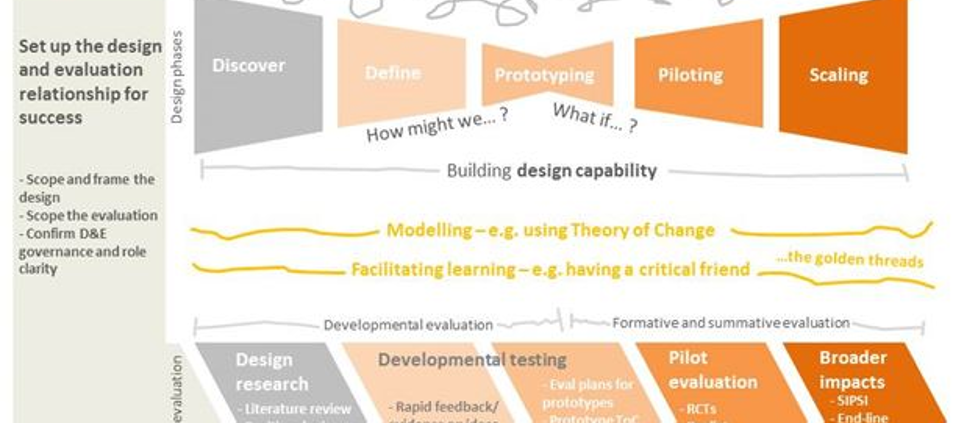

Last month Clear Horizon and The Australian Centre for Social Innovation (TACSI) had a great time delivering our new, sold-out course on reconciling the worlds of Human Centred Design (HCD) and evaluation. Hot off the press, we’re proud to introduce the Integrated Design, Evaluation and Engagement with Purpose (InDEEP) Framework (Figure 1), which underpinned the course.

(Figure 1)

The InDEEP Framework

The Integrated Design, Evaluation and Engagement with Purpose (InDEEP) Framework (Figure 1) has been developed through reflection on two years of collaboration between TACSI (the designers) and Clear Horizon (the evaluators). At a high level, the InDEEP Framework conceptualises the relationships between design and the evaluation that can support it. Put simply, the top half of the diagram sets out the design cycle in five phases (discover, define, prototyping, piloting and scaling). The bottom half of the diagram lists potential evaluative inputs that can be useful at each design phase (design research, developmental testing, pilot evaluation and broader impacts).

The journey starts by setting up the design and evaluation relationship for success, by carefully thinking through governance structures, role clarity and scope. In relation to scope, consideration should first be given to which design phase the project is currently in, or where it is expected to be when the evaluation occurs. Once the design phases of interest have been diagnosed, the different types of evaluation needed can be thought through. It’s definitely a menu of types of evaluation, and if you chose them all you’d be pretty full!

If the design project is in the discover to early prototyping phases, it is likely that developmental evaluation approaches will be appropriate. In these phases evaluation should support learning and the development of ideas. If the design project has moved to the late prototyping through to broader impacts phases, then more traditional formative and summative evaluation may be more appropriate. In these phases there is more accountability for achieving outcomes and impacts.

The InDEEP Framework also acknowledges that some evaluative tools are useful at all phases of the design cycle. In the diagram these are described as golden threads and include both modelling (e.g. Theory of Change) and facilitating learning (e.g. having a critical friend). If needed, process and/or capability evaluation can also be applied at any design phase.

Key insights from the course

Course participants came from different sectors and levels of government. They represented a cohort of people applying user-centred design (UCD) approaches to solve complex problems (from increasing student engagement in education through to the digital transformation of government services). At some level, everyone came to the course looking for tools to help them demonstrate the outcomes and impact of their UCD work. Some key reflections from participants included:

- Theory of Change is a golden thread

- Evaluation can add rigour and de-risk design

- It’s important to quarantine some space for a helicopter view (developmental evaluation)

- Be ready to capture your outcomes and impact

Theory of Change is a golden thread

Theory of Change has proved to be one of our most versatile and flexible tools in design and evaluation. In the design process it can provide direction in the scoping phase (broader goals), absorb learnings and insights in the discover phase (intermediate outcomes), and test out theories of action during prototyping. When you’re ready for a more meaty evaluation, Theory of Change provides you with a solid evaluand to refine, test and/or prove in the piloting and scaling phases. At all stages it surfaces assumptions and is a useful communication device.

Evaluation can add rigour and de-risk design

UCD is a relatively new mechanism being applied to policy development and social programming. It can take time for UCD processes to move through the design cycle to scaling, and it is also assumed that some interventions may fail. This has the potential to make some funders nervous. Participants confirmed that developmental approaches which document key learnings and pivot points in design can help to communicate to funders what has been done. More judgmental process and capability building evaluation can also help demonstrate to funders that innovation is on track.

It’s important to quarantine some space for a helicopter view (developmental evaluation)

The discover, define and early prototyping phases are the realm of designers, who primarily need space to be creative and ideate (be in the washing machine). In these early phases developmental evaluation can enable pause points for the design team to zoom out, take stock of assumptions, and make useful adjustments to the design (a helicopter view of the washing machine). Although participants found the distinction between design and developmental evaluation useful, they took away the challenge that design teams do not often distinguish the roles. One solution to this challenge was to rotate the developmental evaluation role within design teams and/or, resources permitting, bring an external developmental evaluator onto the design team.

Be ready to capture outcomes and impact

As designs move into late prototyping, piloting and scaling, teams come under increased pressure to document outcomes and impact. In many instances this is at least partly to show funders what has been achieved through the innovation process. The key message for participants was that it is important to plan for capturing outcomes early on. One way to be ready is to have a Theory of Change. If you are expecting to have a population-level impact, it may also be important to set up a baseline early on. Equally, if you are chasing more intangible outcomes like policy change, then you should think through techniques like Outcome Harvesting and SIPSI so that you are ready to systematically make a case for causation.

We would love your feedback on our InDEEP Framework. To join the conversation, tweet us at @ClearHorizonAU. If you are interested in learning more, we are running our InDEEP training again early next year; see our public training calendar for more details.

Tom Hannon and Jess Dart